About me

I am a PhD student at the LIP6 laboratory of Sorbonne Université, Paris, France. My thesis, conducted under the supervision of Alix Munier and Adrien Cassagne, focuses on "Modeling and optimization of execution frameworks for deep neural networks on heterogeneous architectures: memory management, energy efficiency, and parallelism".

My research interests relate to architecture-algorithm adequacy in the context of deep learning inference, targeting modern systems (HPC environments or embedded systems) with respect to critical metrics such as energy consumption, inference latency, or throughput.

Education

PhD in Computer Science (1st year)

Sorbonne Université, funded by ENS de Lyon. 2025-2028

MS and BS in Computer Science

École Normale Supérieure de Lyon. 2021-2025

Interests

Inproceedings (2)

Poster (1)

Efficient Scheduling of Transformers using StarPU

For the first half of my last year at ENS de Lyon, I worked as a research intern at Inria Bordeaux in the TOPAL team (HPC).

Using the NNTile framework, I studied how to efficiently schedule the elementary operations of the attention layer of transformers.

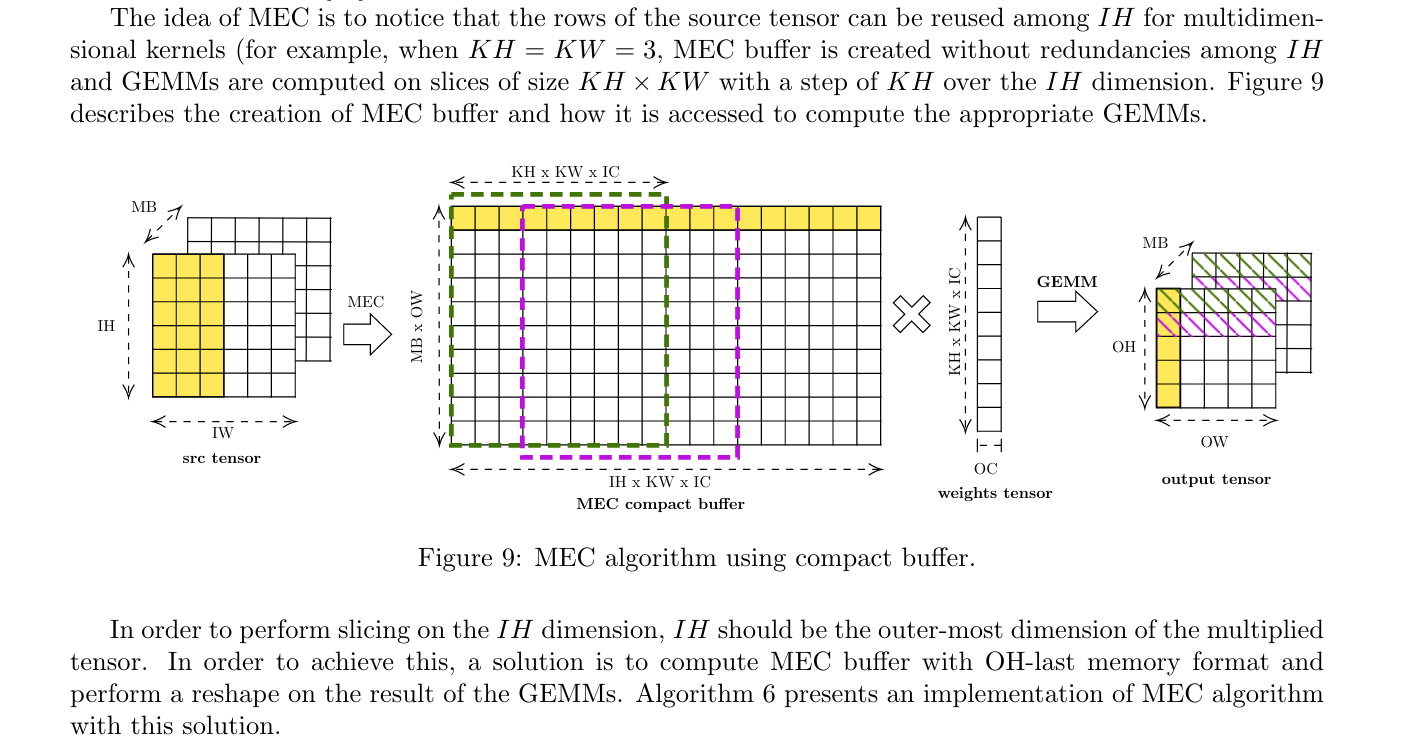

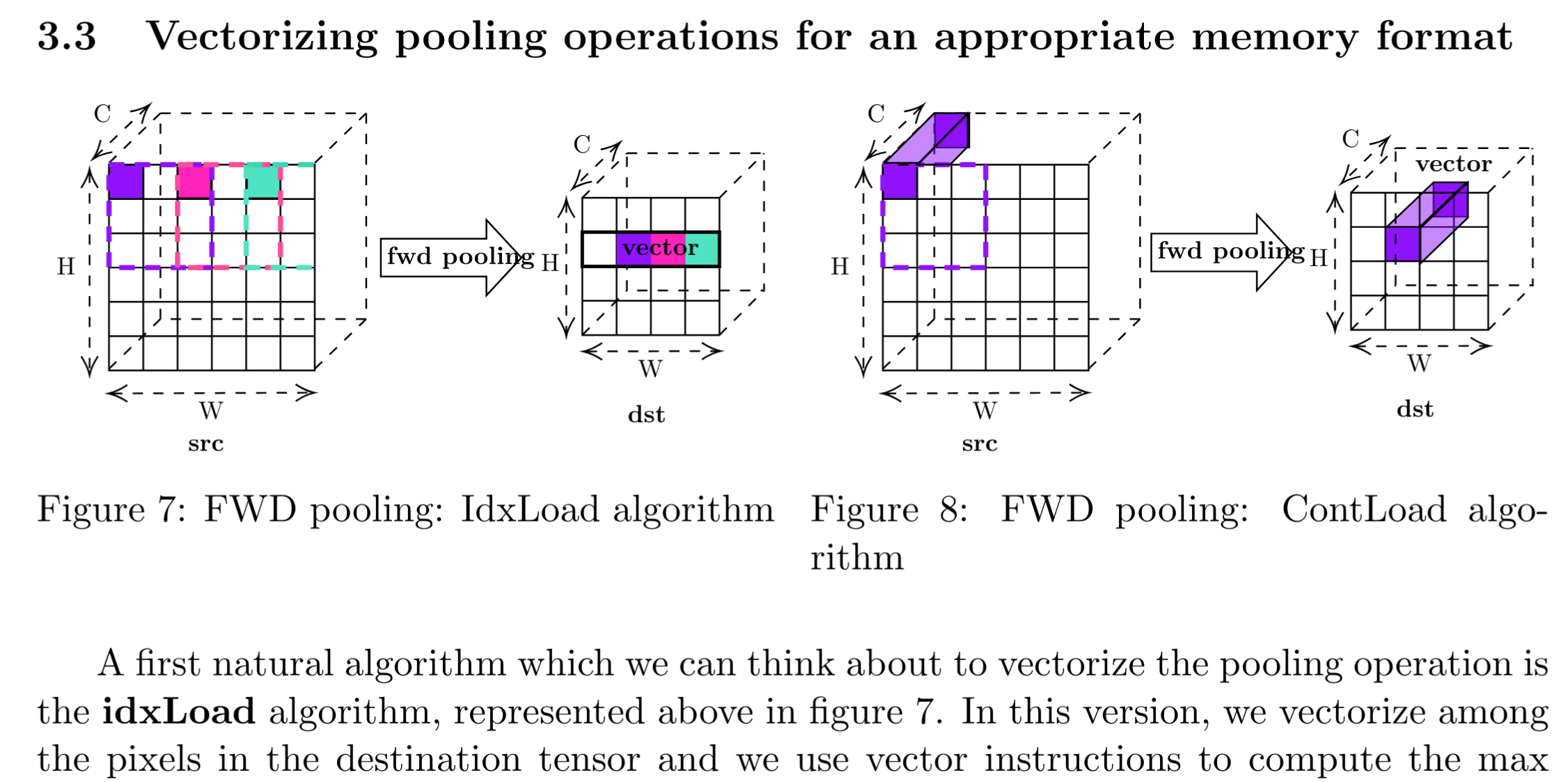

Optimizing Deep Learning Convolutions for Embedded Processors

During the second year of my Master's degree, I worked as a research intern at LIP6 (Paris) for 5 months in the ALSOC team (embedded systems).

During this internship, I studied the time and performance efficiency of the four most widely used methods for computing deep learning convolutions.

Efficient Execution of Deep Learning Kernels on Long Vector Machines

During the first year of my Master's degree, I worked as a research intern at the Barcelona Supercomputing Center (BSC) for 3 months in the SONAR team.

The goal of this internship was to optimize deep learning kernels targeting long vector architectures.

DrawCaml: an Object-Oriented graphical module for OCaml

DrawCaml is a graphical module for OCaml, built for Unix systems. It relies on the X11 library and is developed in C++.

Our motivation was to make it easier to create graphical applications in OCaml by using the language's object-oriented features.

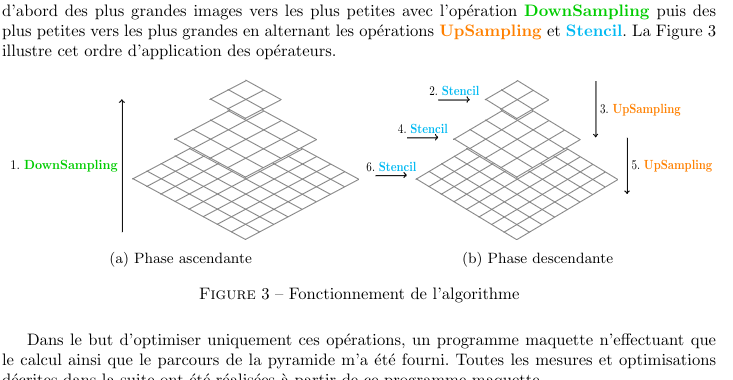

Parallel Optimization of a Pyramidal Computer Vision Algorithm

I did my Bachelor's degree internship with the ALSOC team of the LIP6 laboratory (Paris). There, I studied the optimisation of the parallel execution of pyramidal computer vision algorithms.

These algorithms are widely used in image processing and are known to be very costly, so their optimisation is critical.

Alongside my PhD, I contribute to teaching undergraduate and master’s-level courses at Sorbonne Université (UFR d'Ingénierie 919).

UM5IN160 - Parallelism and Accelerators for Cluster Computing (S1-M2)

This class introduces several programming paradigms for developing modern high-performance applications: SIMD (AVX-512, MIPP), OpenMP, MPI, Dataflow (StreamPU), and GPU (CUDA).

UL2IN005 - Discrete Mathematics (S1-L2)

This course introduces the mathematical foundations underlying computer science, focusing on induction, computation models, automata and formal languages, program termination and recursion, and the basics of propositional logic.

UL2IN003 - Introduction to algorithms (S2-L2)

This course covers program termination and complexity analysis, along with fundamental data structures and techniques such as sorting algorithms, trees, and graphs.